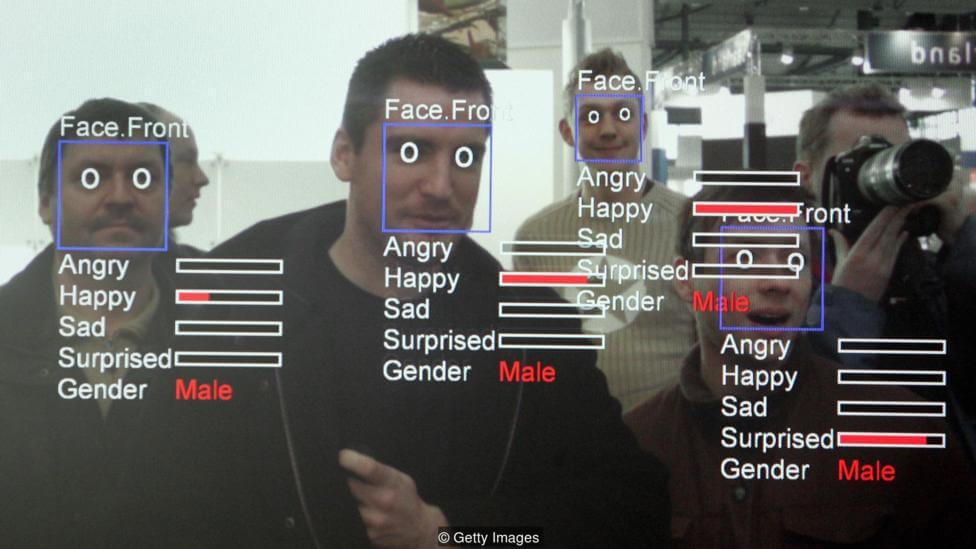

Artificial intelligence is already everywhere, and it is here to stay. Many aspects of our lives relate to AI in one way or another: it decides which books we buy, which flights we book, how far a submitted résumé gets, whether the bank will grant a loan, and which cancer treatment a patient receives. Many of these applications have not yet reached our side of the ocean, but they certainly will.

All of these things, and many others, can now be largely determined by complex software systems. The tremendous successes AI has achieved over the past few years are astounding: in many, many cases, AI makes our lives better. The growth of artificial intelligence has become inevitable. Huge sums have been invested in AI startups, and many established tech companies, including giants such as Amazon, Facebook and Microsoft, have opened new research labs. It is no exaggeration to say that software now means AI.

Some predict that the arrival of AI will be as big an event as the advent of the Internet, or even bigger. The BBC asked a panel of experts what this rapidly changing world, filled with brilliant machines, holds in store for us humans. Remarkably, almost all of their answers focused on ethical issues.

Peter Norvig, director of research at Google and a pioneer of machine learning, believes that data-driven AI technology raises a particularly important question: how to ensure that these new systems improve society as a whole, and not just those who control them. “Artificial intelligence has proven very effective at practical tasks, from labeling photos to understanding speech and written natural language to identifying diseases,” he says. “Now the challenge is to make sure everyone benefits from this technology.”

The big problem is that the complexity of the software often makes it impossible to determine exactly why an AI system does what it does. Because of the way modern AI works (it is built on a hugely successful technique known as machine learning), you cannot simply open the hood and see how it works. So we just have to trust it. The challenge is to come up with new ways to monitor or audit the many areas in which AI plays a big role.

Jonathan Zittrain, a professor of Internet law at Harvard Law School, believes there is a risk that the complexity of computer systems may prevent us from ensuring adequate verification and control. “I’m worried about the erosion of human autonomy as our systems, aided by technology, become increasingly complex and tightly interconnected,” he says. “If we ‘set it and forget it’, we may regret how the system evolves and that we did not consider the ethical dimension earlier.”

Other experts share this concern. “How can we certify these systems as safe?” asks Missy Cummings, director of the Humans and Autonomy Lab at Duke University in North Carolina, who was one of the US Navy’s first female fighter pilots and is now a drone specialist.

AI will require oversight, but it is not yet clear how to provide it. “We currently have no generally accepted approaches,” says Cummings. “And without an industry standard for testing such systems, it will be difficult to implement these technologies widely.”

But in a rapidly changing world, regulators often find themselves playing catch-up. In many important areas, such as criminal justice and health care, companies are already using artificial intelligence to decide on parole or to diagnose disease. By handing such decisions over to machines, we risk losing control: who will check that the system is right in each case?

Danah Boyd, principal researcher at Microsoft Research, says there are serious questions about the values being built into such systems, and about who is ultimately responsible for them. “Regulators, civil society and social theorists increasingly want to see these technologies be fair and ethical, but their concepts are vague at best.”

One area fraught with ethical problems is employment and the job market in general. AI allows robots to perform increasingly complex jobs and is displacing large numbers of people. Foxconn Technology Group, the Taiwanese supplier to Apple and Samsung, announced its intention to replace 60,000 factory workers with robots, and the Ford factory in Cologne, Germany, has put robots to work alongside people.

Moreover, if increasing automation has a big impact on employment, it could also harm people’s mental health. “If you think about what gives people meaning in life, it’s three things: meaningful relationships, passionate interests and meaningful work,” says Ezekiel Emanuel, a bioethicist and former health care adviser to Barack Obama. “Meaningful work is a very important element of someone’s identity.” He says that in regions where jobs have disappeared along with closed factories, the risk of suicide, substance abuse and depression rises.

As a result, there is a growing need for ethicists. “Companies will follow their market incentives. That’s not a bad thing, but we can’t rely on them to behave ethically just because,” says Kate Darling, an expert in law and ethics at MIT. “We’ve seen this every time a new technology has come along and we’ve had to decide what to do with it.”

Darling notes that many big-name companies, such as Google, have already set up ethics review committees to oversee the development and deployment of their AI. She believes such committees should be more common. “We don’t want to stifle innovation, but we simply need these kinds of structures,” she says.

The details of who sits on Google’s ethics committee and what it does remain vague. But last September, Facebook, Google and Amazon launched a consortium to develop solutions to the many pitfalls surrounding AI safety and privacy. OpenAI is another organization, one that develops and promotes open-source AI for the benefit of all. “It is important that machine learning be studied openly and disseminated through open publications and open source, so that we can all share in the rewards,” says Norvig.

If we want to develop industry and ethical standards while clearly understanding what is at stake, it is important to bring ethicists, technologists and corporate leaders together to brainstorm. The goal is not simply to replace people with robots, but to help people.

The article is based on materials .